A long-awaited feature for Attunement is non-human competitors.

One of the biggest risks for Attunement is becoming a ‘multiplayer-only’ game.

Making a multiplayer-only game is a dangerous siren song. It’s fun at conventions, but the moment a player has no friends nearby, you have a useless piece of software.

So let’s dig into a big chunk of how Bots & AI work in Attunement!

ActorRequestData

When a bot is created, it goes through the same pipeline as when a player is created. It adds a Shaper to the scene.

But that process requires answers to many specific questions:

- Where should it spawn? A random player spawn, a random enemy spawn, a specific point, a spawn point with a particular trait, or near an existing player’s raycast?

- What abilities should the new shaper have? (Automatically configures outfits/trinkets)

- Should it be a player-controlled character? A bot? A target dummy? A temporary summon?

- What team/allegiance should it have?

- Should it have any Rituals (which modify the abilities it has)?

- Should it have any Status effects (like 3 seconds of invulnerability on spawn)?

- What movement paradigm should it use (2D platforming, 3D flying)?

- How much Max Health should it have?

- What special bot considerations should be made?

- Should it navigate? Toward the player, toward a destination, patrolling, or orbiting the player?

- Should it be immobile & immune to knockback?

- Should it make decisions & use abilities?

- Should it have UI and a split-screen viewport?

- How fast should it make decisions?

- Should it have a positional offset?

- Should it have gravity enabled?

This is what the ActorRequestData object is for. When a programmer makes a bot, they use a method-chaining pattern to set only the parameters that matter for that bot. Each deviation from default behavior takes one or two function calls.

The result is highly configurable bots with very little work per new bot.

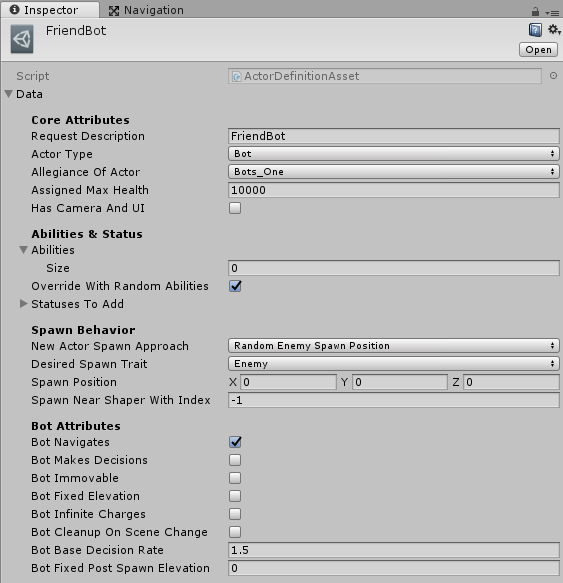
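To make the chaining pattern concrete, here is a minimal Python sketch. Attunement itself is a Unity/C# project, so this is only an analogue, not the actual class; the field and method names (`as_bot`, `spawn_at`, `as_turret`, and so on) are hypothetical. The idea it shows is that every setting has a default and each setter returns the request so calls can chain.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Controller(Enum):
    PLAYER = auto()
    BOT = auto()
    TARGET_DUMMY = auto()
    SUMMON = auto()


class SpawnRule(Enum):
    RANDOM_PLAYER_SPAWN = auto()
    RANDOM_ENEMY_SPAWN = auto()
    SPECIFIC_POINT = auto()


@dataclass
class ActorRequestData:
    # Every field has a default, so a request only needs calls for the
    # settings that deviate from default behavior. All names are hypothetical.
    controller: Controller = Controller.PLAYER
    spawn_rule: SpawnRule = SpawnRule.RANDOM_PLAYER_SPAWN
    abilities: list = field(default_factory=list)
    team: int = 0
    max_health: float = 100.0
    navigates: bool = True
    makes_decisions: bool = True
    immobile: bool = False
    knockback_immune: bool = False
    gravity: bool = True

    # Each setter mutates the request and returns self so calls can chain.
    def as_bot(self) -> "ActorRequestData":
        self.controller = Controller.BOT
        return self

    def spawn_at(self, rule: SpawnRule) -> "ActorRequestData":
        self.spawn_rule = rule
        return self

    def with_abilities(self, *names: str) -> "ActorRequestData":
        self.abilities.extend(names)
        return self

    def on_team(self, team: int) -> "ActorRequestData":
        self.team = team
        return self

    def with_max_health(self, hp: float) -> "ActorRequestData":
        self.max_health = hp
        return self

    def as_turret(self) -> "ActorRequestData":
        # Stationary and immune to knockback, but still picks abilities.
        self.navigates = False
        self.immobile = True
        self.knockback_immune = True
        return self


# A StarfallBot-style turret: a handful of chained calls, everything else default.
turret = (
    ActorRequestData()
    .as_bot()
    .spawn_at(SpawnRule.RANDOM_ENEMY_SPAWN)
    .with_abilities("Starfall")
    .on_team(1)
    .as_turret()
)
```

Because each setter returns `self`, a new bot variant costs a few extra calls rather than a new class; a C# version would typically do the same with methods that return `this`.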

ActorDefinitionAsset

A further improvement comes from ActorDefinitionAsset (a class that inherits from ScriptableObject), which holds an ActorRequestData reference.

This class can be used to create in-project assets that are configured in the editor rather than through scripting.
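A rough Python analogue of the asset idea, assuming a JSON string stands in for the editor-serialized asset (in Unity the data would live on the ScriptableObject itself). `ActorDefinitionAsset.from_json` and `create_request` are illustrative names only. One design choice worth showing: the asset hands out a copy of its request, because a ScriptableObject is a shared reference and mutating it at runtime would change it for every actor that uses the asset.

```python
import copy
import json
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class ActorRequestData:
    # Minimal stand-in for the request object; fields are hypothetical.
    name: str = "Actor"
    is_bot: bool = False
    abilities: List[str] = field(default_factory=list)
    max_health: float = 100.0
    immobile: bool = False


@dataclass
class ActorDefinitionAsset:
    """Data-only definition, loosely analogous to a ScriptableObject asset."""
    request: ActorRequestData

    @classmethod
    def from_json(cls, text: str) -> "ActorDefinitionAsset":
        data = json.loads(text)
        known = {f.name for f in fields(ActorRequestData)}
        unknown = set(data) - known
        if unknown:
            # Catch typos in hand-edited definitions early.
            raise ValueError(f"unknown fields: {sorted(unknown)}")
        return cls(ActorRequestData(**data))

    def create_request(self) -> ActorRequestData:
        # Deep copy: tweaking one spawned actor must not edit the shared asset.
        return copy.deepcopy(self.request)


rock_bot_asset = ActorDefinitionAsset.from_json(
    '{"name": "RockThrowBot", "is_bot": true,'
    ' "abilities": ["Rock Throw"], "immobile": true}'
)
```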

Decision Making

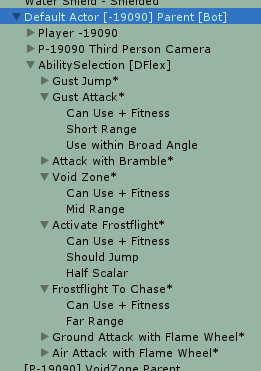

Bots need to decide which abilities to use. I use a Utility Theory AI system called DecisionFlex to handle the actual decision-making. Bots pay attention to values like distance to the player, the angle between the target and the center of their field of view, difference in vertical height, clear line of sight, etc.

Each ability can have multiple use cases (see the right-hand image: Gust Jump and Gust Attack are two different actions). These use cases can even respect internal ability state, such as “Only activate this use case if the ability is not active”.
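The sketch below shows the general utility-theory idea in Python; it is not DecisionFlex’s actual API. Each consideration maps one input (distance, view angle, height difference, line of sight) to a 0 to 1 score through a response curve, an action case multiplies its considerations together, and the highest-scoring case wins. The curves, context keys, and the `gust_active` flag are hypothetical stand-ins for Gust Jump and Gust Attack.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Context = Dict[str, float]


@dataclass
class Consideration:
    # Reads one input from the context and maps it to a 0..1 utility.
    key: str
    curve: Callable[[float], float]

    def score(self, ctx: Context) -> float:
        return max(0.0, min(1.0, self.curve(ctx[self.key])))


@dataclass
class ActionCase:
    name: str
    considerations: List[Consideration]
    # Optional gate on internal ability state, e.g. "only if not active".
    precondition: Callable[[Context], bool] = lambda ctx: True

    def score(self, ctx: Context) -> float:
        if not self.precondition(ctx):
            return 0.0
        total = 1.0
        for consideration in self.considerations:
            total *= consideration.score(ctx)  # a single zero vetoes the case
        return total


def choose(cases: List[ActionCase], ctx: Context) -> Optional[str]:
    # Highest utility wins; None means nothing is worth doing right now.
    best = max(cases, key=lambda case: case.score(ctx))
    return best.name if best.score(ctx) > 0.0 else None


def falloff(max_range: float) -> Callable[[float], float]:
    # Linear response curve: 1 at zero distance, 0 at max_range
    # (Consideration.score clamps anything beyond that to 0).
    return lambda d: 1.0 - d / max_range


gust_attack = ActionCase("Gust Attack", [
    Consideration("distance", falloff(8.0)),
    Consideration("line_of_sight", lambda v: v),
    Consideration("view_angle", lambda deg: math.cos(math.radians(deg))),
])
gust_jump = ActionCase(
    "Gust Jump",
    [Consideration("height_diff", lambda h: h / 5.0)],
    precondition=lambda ctx: ctx["gust_active"] == 0.0,
)
```

Multiplying scores means any single consideration at zero vetoes the action, which is a common choice in utility AI; averaging instead would let strong factors compensate for weak ones.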

I can control how often bots make decisions, as well as how that rate varies. For example, bots make decisions at different rates depending on how endangered they feel: at low health, they have less time between actions, making them more responsive and dangerous.
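One simple way to get the “more endangered, faster decisions” behavior, sketched in Python under assumed tuning values: linearly interpolate the decision interval between a calm value at full health and a panicked value at zero health. The function, the class, and the 1.5 s / 0.4 s defaults are hypothetical.

```python
def decision_interval(health: float, max_health: float,
                      calm: float = 1.5, panicked: float = 0.4) -> float:
    """Seconds between decisions: `calm` at full health, shrinking
    linearly toward `panicked` as health approaches zero."""
    health_fraction = max(0.0, min(1.0, health / max_health))
    danger = 1.0 - health_fraction
    return calm + (panicked - calm) * danger


class DecisionTimer:
    def __init__(self, max_health: float):
        self.max_health = max_health
        self.remaining = 0.0  # decide immediately after spawning

    def tick(self, dt: float, health: float) -> bool:
        """Advance by dt seconds; return True when a decision is due."""
        self.remaining -= dt
        if self.remaining <= 0.0:
            # The next wait is based on health at the moment of deciding.
            self.remaining = decision_interval(health, self.max_health)
            return True
        return False
```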

There are still a number of improvements to make, but the initial approach works decently.

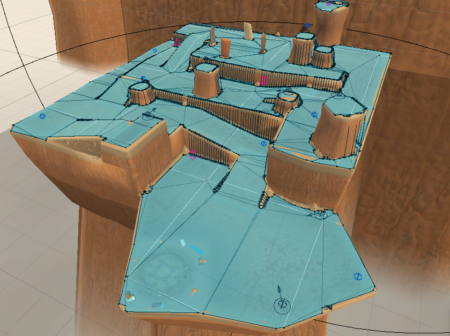

Pathfinding

When a bot is hit with a knockback move, it may lose its grounded state, which temporarily disables its NavMeshAgent until it touches ground again. This lets a knockback move decouple a NavMeshAgent from the NavMesh, but it also means the bot can’t keep pathfinding while airborne.
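A minimal Python sketch of that grounded/agent hand-off, assuming a vertical-impulse threshold decides whether a hit lifts the bot off the ground. `agent_enabled` stands in for toggling the NavMeshAgent component; the class, its method names, and the threshold value are hypothetical.

```python
class BotLocomotion:
    """Hypothetical sketch of handing control between pathfinding and physics."""

    def __init__(self, lift_threshold: float = 2.0):
        self.lift_threshold = lift_threshold  # assumed upward impulse that lifts the bot
        self.grounded = True
        self.agent_enabled = True  # stands in for enabling the NavMeshAgent
        self.velocity = [0.0, 0.0, 0.0]

    def apply_knockback(self, impulse):
        for axis in range(3):
            self.velocity[axis] += impulse[axis]
        if impulse[1] > self.lift_threshold:
            # Launched: disable the agent so physics, not the NavMesh, moves the bot.
            self.grounded = False
            self.agent_enabled = False

    def on_ground_contact(self):
        if not self.grounded:
            self.grounded = True
            self.velocity[1] = 0.0
            self.agent_enabled = True  # resume pathfinding from the landing spot

    def can_pathfind(self) -> bool:
        return self.grounded and self.agent_enabled
```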

There are also many other pathfinding challenges:

- The destination is not reachable from the bot’s current NavMesh location

- Gracefully handling moving down OffMeshLinks (falling organically, rather than snapping down)

- Short-circuiting a long ground path by jumping (letting vector-math navigation take over)

- Fighting back when knocked off the stage instead of dying without a struggle (addressed by providing [Z Forward] while airborne)

- Respecting player knockback so it doesn’t feel cheap (accomplished by not providing [Z Forward] when recently hit by knockback)
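The last two bullets pull in opposite directions, and a time window is one way to reconcile them. This Python sketch treats [Z Forward] as steering toward a recovery point on the stage and suppresses it for an assumed grace period after a knockback hit. The function name, the 2D (x, z) representation, and the 0.75 s window are all hypothetical.

```python
import math
from typing import Optional, Tuple

Vec2 = Tuple[float, float]  # (x, z) on the horizontal plane


def recovery_input(airborne: bool, now: float, last_knockback_time: float,
                   bot_pos: Vec2, stage_point: Vec2,
                   grace: float = 0.75) -> Optional[Vec2]:
    """Return a unit steering vector toward the stage, or None for no input."""
    if not airborne:
        return None  # grounded: the NavMeshAgent handles movement
    if now - last_knockback_time < grace:
        return None  # let the player's knockback play out first
    dx = stage_point[0] - bot_pos[0]
    dz = stage_point[1] - bot_pos[1]
    length = math.hypot(dx, dz)
    if length == 0.0:
        return None  # already above the recovery point
    return (dx / length, dz / length)
```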

The initial implementation works, but there are many heuristics and knobs to add to improve the airborne behavior.

What Bots To Make?

Here’s an earlier image with many of the bots we’ve made. A few aren’t shown.

Target Dummy

The Target Dummy isn’t really a bot but more of an inert actor. It has no UI, makes no decisions, and can’t navigate. It doesn’t even have health.

You can use abilities on it, which makes it useful for testing.

Primary Bots

- Gust – Fire Leap – Sand Sculpt – Geyser – Rush

Each of these bots is built around a primary ability. They use some heuristics for when to jump, which lets them reach normally unreachable parts of the map and traverse it more quickly.

FriendBot, FlightBot, OrbitBot

These bots navigate but hardly use any abilities; they move toward (or near) the player. They serve two testing purposes: evaluating navigation and testing abilities.

VoidZoneBot, StarfallBot, AiryBot, RockThrowBot

All four of these bots are renditions of the same idea.

Each uses a medium- or long-range ability. Each is immobile and immune to movement effects. They also spawn exclusively on high-elevation enemy spawn points on the map.

These bots behave like dangerous turrets that must be recognized and defeated (or suffer the consequences).
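A sketch of how the turret bots’ spawn rule could be expressed on top of spawn-point traits. It assumes a hypothetical `high_ground` trait on spawn points and falls back to the highest enemy spawn when no point is tagged; none of these names come from the actual project.

```python
import random
from dataclasses import dataclass, field
from typing import List, Set, Tuple


@dataclass
class SpawnPoint:
    name: str
    position: Tuple[float, float, float]  # (x, y, z); y is elevation
    team: str                             # "player" or "enemy"
    traits: Set[str] = field(default_factory=set)


def pick_turret_spawn(points: List[SpawnPoint], rng: random.Random,
                      trait: str = "high_ground") -> SpawnPoint:
    enemy = [p for p in points if p.team == "enemy"]
    if not enemy:
        raise ValueError("map has no enemy spawn points")
    # Prefer points a level designer explicitly tagged for turrets.
    tagged = [p for p in enemy if trait in p.traits]
    if tagged:
        return rng.choice(tagged)
    # Otherwise fall back to the highest enemy spawn(s) on the map.
    top = max(p.position[1] for p in enemy)
    return rng.choice([p for p in enemy if p.position[1] == top])
```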

RandomBot & Other Bots

Because every ability knows its own Considerations, DecisionFlex can determine when any ability should be used. As a result, a bot with a random assortment of abilities is a decent foe as long as it constantly moves toward the player. RandomBot provides a broad initial pool of varied enemies and creates novel testing situations for rooting out bugs.
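Since the utility AI already knows when each ability is worth using, building a RandomBot can be as small as picking a random, distinct loadout. This Python sketch assumes an illustrative ability pool (names taken from this post) and a loadout size of three. Seeding the RNG is a design choice that lets a bot which exposed a bug be recreated exactly.

```python
import random

# Illustrative pool built from ability names mentioned in this post.
ABILITY_POOL = ["Gust", "Fire Leap", "Sand Sculpt", "Geyser", "Rush",
                "Void Zone", "Starfall", "Rock Throw"]


def random_loadout(rng: random.Random, pool=ABILITY_POOL, count: int = 3) -> list:
    # sample() draws without replacement, so no ability appears twice.
    return rng.sample(pool, k=min(count, len(pool)))


def make_random_bot(seed: int) -> dict:
    # The same seed always yields the same loadout, so a buggy
    # encounter can be replayed exactly.
    rng = random.Random(seed)
    return {
        "seed": seed,
        "abilities": random_loadout(rng),
        "navigation": "toward_player",
    }
```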

As for the other unmentioned bots, most have one or two abilities that portray a gimmick or particular synergy. As bots are refined, I’ll go into this topic more.

Future Improvements

There is a laundry list of improvements to make:

Platforming & Navigation

- Adding more ‘Should Probably Jump’ heuristics
  - [Done] When destination is unreachable
  - [Done] When destination is more Above than Away
  - When current NavPath is much longer than vector distance
  - When in a hazard
  - When directly above a pit or hazard
  - When the bot wants to be above their destination

- Improve Airborne Enemy behavior
  - [Done] Adding 3D flight movement for air control
  - [Done] Bots ascending and descending based on elevation difference from goal
  - Airborne enemies can maintain a target elevation above the ground
  - Can define flying bots that always have 3D flight enabled
  - Better airborne vector math (to do things like avoid walls, strafe, etc.)

- Bots navigating toward map-specific resources (health/cooldown tokens)

- Add flocking behaviors for advanced navigation (flanking, orbiting, patrolling, ambushing, evading the player)
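The two finished ‘Should Probably Jump’ checks, plus the planned path-length one, can be sketched as a single function. This is a hypothetical Python version: `path_complete` mirrors what Unity reports as `NavMeshPath.status == NavMeshPathStatus.PathComplete`, `path_length` is assumed to be the summed length of the NavMesh path, and the 2x detour ratio is an assumed tuning value.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def should_probably_jump(bot: Vec3, dest: Vec3, path_complete: bool,
                         path_length: Optional[float],
                         detour_ratio: float = 2.0) -> Optional[str]:
    """Return the reason to jump, or None to keep walking the NavMesh path."""
    dx, dy, dz = dest[0] - bot[0], dest[1] - bot[1], dest[2] - bot[2]
    away = math.hypot(dx, dz)  # horizontal distance to the destination
    above = dy                 # positive when the destination is higher

    if not path_complete:
        return "unreachable"             # [Done] heuristic
    if above > away:
        return "more_above_than_away"    # [Done] heuristic
    straight = math.sqrt(dx * dx + dy * dy + dz * dz)
    if (path_length is not None and straight > 0.0
            and path_length > detour_ratio * straight):
        return "path_much_longer_than_vector"  # planned heuristic
    return None
```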

Conveyance

- Bot UI/Health indicators so the player can better understand the tide of battle

- Unique visuals for different bots (to make them stand out more)

- Bots signalling intentions & their current state
  - With dialogue speech bubbles over the bot’s head
  - With exaggerated animations
  - With audio barks or voiceover lines

Gameplay & Balance

- Gameplay systems to add N bots over time and remove them as they are defeated

- Better difficulty control for bots
  - Variable ability decision rates (how frequently they decide on the optimal ability to use)
  - Decision delay rates (delaying the execution of an ability-usage decision)
  - Ability to define max health and a damage-dealt multiplier (on a broad scale)
  - Controls for navigation speed & platforming capability

- Changing the previous win mechanism to work with bots

- Gathering data on players fighting bots to evaluate which bots & ability combinations are most effective
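The planned “add N bots over time” system could start as small as this Python sketch: a director that tops the population back up to a target count, spawning at most one bot per interval. `BotWaveDirector` and its parameters are hypothetical, and real spawning would go through the ActorRequestData pipeline rather than handing out integer ids.

```python
class BotWaveDirector:
    """Keeps up to `target` bots alive, adding at most one every `interval` seconds."""

    def __init__(self, target: int, interval: float):
        self.target = target
        self.interval = interval
        self.alive = set()
        self.cooldown = 0.0
        self.next_id = 0

    def on_defeated(self, bot_id: int) -> None:
        # Removing a bot frees a slot; the next tick can refill it.
        self.alive.discard(bot_id)

    def tick(self, dt: float) -> list:
        """Advance time by dt seconds; return ids of bots spawned this tick."""
        self.cooldown = max(0.0, self.cooldown - dt)
        spawned = []
        if self.cooldown == 0.0 and len(self.alive) < self.target:
            bot_id = self.next_id
            self.next_id += 1
            self.alive.add(bot_id)
            spawned.append(bot_id)
            self.cooldown = self.interval
        return spawned
```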

Closing

Overall, I’m very happy with the implementation so far.

My next topics of focus are game modes, UI tweaks, animation changes, and character controller improvements.

I’ll be showing the game at iFEST on May 5th (7 days from posting). It’ll probably feature a revised [Vs Bots] mode or perhaps the standard 1-on-1 combat.

As for blog posts, I’m still going to write a design focus post, but making informative visuals is a harder challenge.

Life Update

In an unrelated life update, I’ve started a new job doing contract work on Microsoft’s HoloLens, so Attunement will be getting a lesser share of my personal time. I’ll still be working on it, but it will be slower going.

As always, thanks for reading!